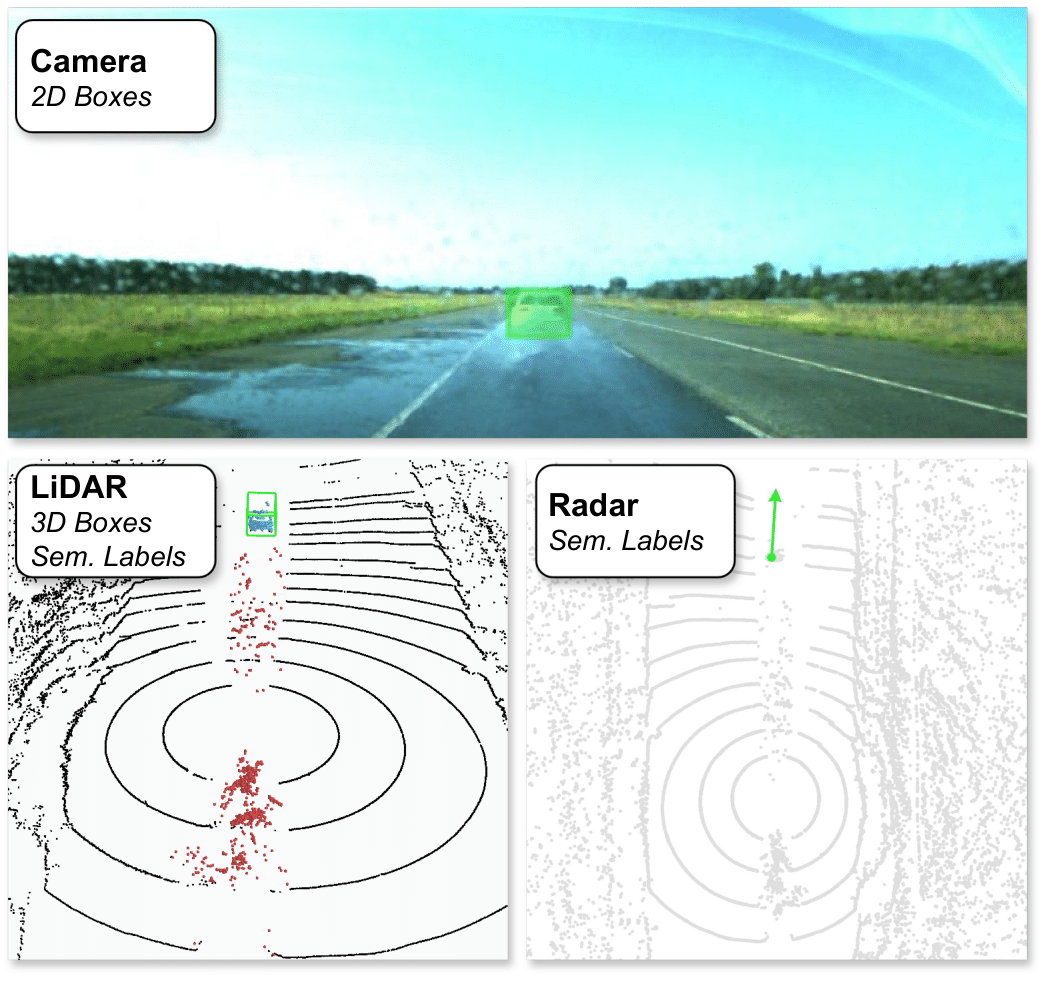

The following label types are provided:

- Camera: 2D Boxes
- LiDAR: 3D Boxes, Semantic Labels
- Radar: Semantic Labels

[Figure labels: velocity 130 km/h, 120 km/h, 110 km/h, 100 km/h]

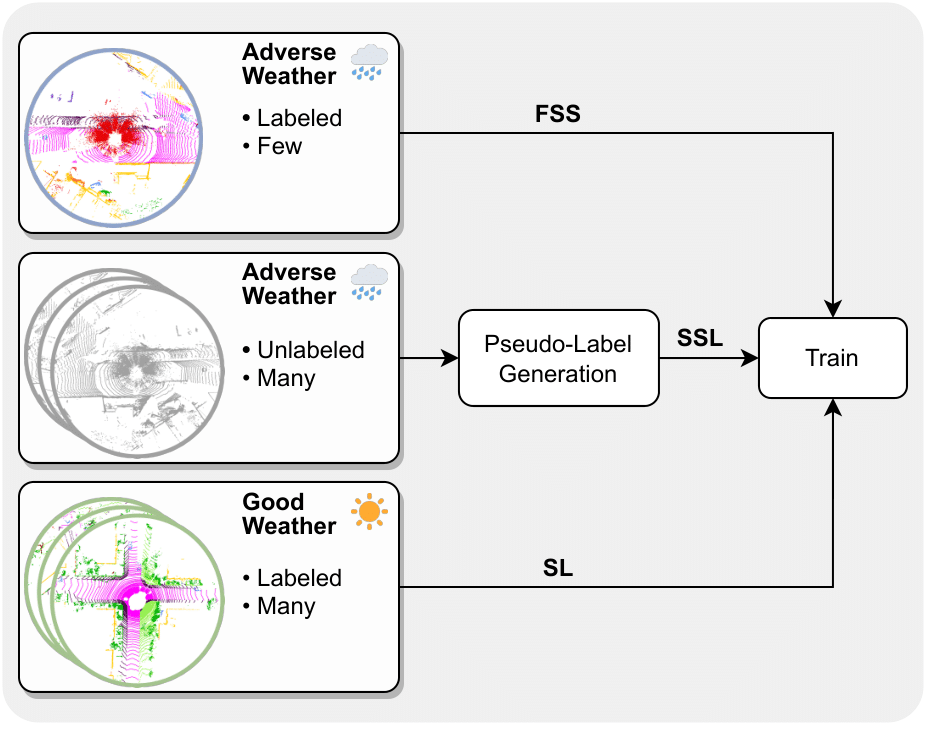

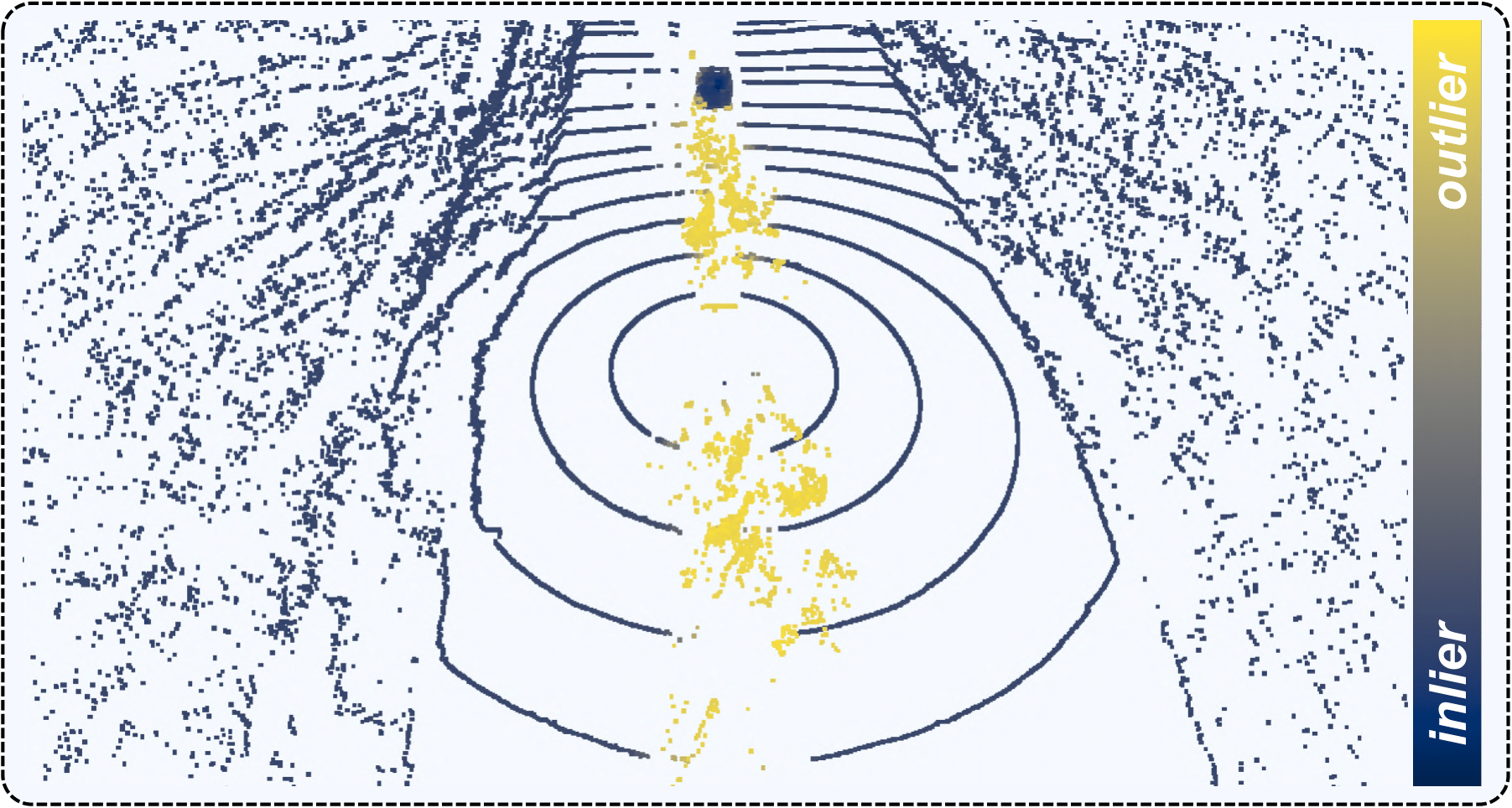

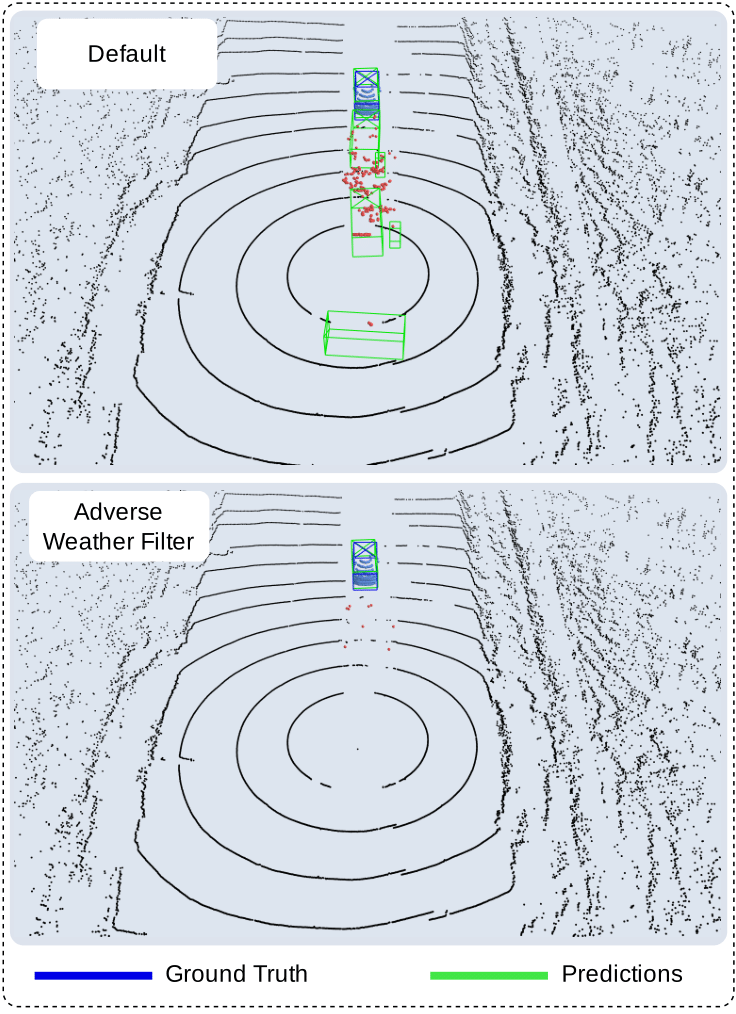

Autonomous vehicles rely on camera, LiDAR, and radar sensors to navigate the environment. Adverse weather conditions like snow, rain, and fog are known to be problematic for both camera and LiDAR-based perception systems. Currently, it is difficult to evaluate the performance of these systems in such conditions due to the lack of publicly available datasets containing multimodal labeled data. To address this limitation, we propose the SemanticSpray++ dataset, which provides labels for camera, LiDAR, and radar data of highway-like scenarios in wet surface conditions. In particular, we provide 2D bounding boxes for the camera image, 3D bounding boxes for the LiDAR point cloud, and semantic labels for the radar targets. By labeling all three sensor modalities, the SemanticSpray++ dataset offers a comprehensive test bed for analyzing the performance of different perception methods when vehicles travel on wet surfaces.

git clone https://github.com/uulm-mrm/semantic_spray_dataset.git

bash download.sh

├── data
│   ├── Crafter_dynamic
│   │   ├── 0000_2021-09-08-14-36-56_0
│   │   │   ├── image_2
│   │   │   │   ├── 000000.jpg
│   │   │   │   └── ....
│   │   │   ├── delphi_radar
│   │   │   │   ├── 000000.bin
│   │   │   │   └── ....
│   │   │   ├── ibeo_front
│   │   │   │   ├── 000000.bin
│   │   │   │   └── ....
│   │   │   ├── ibeo_rear
│   │   │   │   ├── 000000.bin
│   │   │   │   └── ....
│   │   │   ├── labels
│   │   │   │   ├── 000000.label
│   │   │   │   └── ....
│   │   │   ├── radar_labels
│   │   │   │   ├── 000000.npy
│   │   │   │   └── ....
│   │   │   ├── object_labels
│   │   │   │   ├── camera
│   │   │   │   │   ├── 000000.json
│   │   │   │   │   └── ....
│   │   │   │   ├── lidar
│   │   │   │   │   ├── 000000.json
│   │   │   │   │   └── ....
│   │   │   ├── velodyne
│   │   │   │   ├── 000000.bin
│   │   │   │   └── ....
│   │   │   ├── poses.txt
│   │   │   ├── metadata.txt
│   ├── Golf_dynamic
│   ...
├── ImageSets
│   ├── test.txt
│   └── train.txt
├── ImageSets++
│   └── test.txt
└── README.txt
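As an illustration of how this layout can be traversed, the following is a minimal sketch that collects the per-frame file paths of every sensor and label folder. It only relies on the folder names and file extensions shown in the tree; the helper names (`MODALITIES`, `list_scenes`, `list_frame_ids`, `frame_files`) are hypothetical and not part of the official tooling, and the sketch does not decode any file contents.

```python
from pathlib import Path

# Per-frame sub-directories and file extensions, copied from the directory tree.
# The dictionary itself is an illustrative helper, not part of the dataset tools.
MODALITIES = {
    "image_2": ".jpg",
    "delphi_radar": ".bin",
    "ibeo_front": ".bin",
    "ibeo_rear": ".bin",
    "labels": ".label",
    "radar_labels": ".npy",
    "object_labels/camera": ".json",
    "object_labels/lidar": ".json",
    "velodyne": ".bin",
}


def list_scenes(data_root):
    """Return all scene directories, assuming the layout data/<group>/<scene>."""
    root = Path(data_root)
    return sorted(p for p in root.glob("*/*") if p.is_dir())


def list_frame_ids(scene_dir, reference="velodyne"):
    """List frame ids (e.g. '000000') from the files of one reference folder."""
    ref_dir = Path(scene_dir) / reference
    return sorted(p.stem for p in ref_dir.glob(f"*{MODALITIES[reference]}"))


def frame_files(scene_dir, frame_id):
    """Map each folder to the file of one frame, or None if that file is missing."""
    scene = Path(scene_dir)
    files = {}
    for subdir, ext in MODALITIES.items():
        path = scene / subdir / f"{frame_id}{ext}"
        files[subdir] = path if path.is_file() else None
    return files
```

For example, `frame_files(scene, "000000")["radar_labels"]` would point to `radar_labels/000000.npy` of that scene when the file exists. Returning `None` for missing files is a design choice of this sketch, so that scenes without a given label type can still be indexed.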

We provide Python code for loading and visualizing the dataset in the GitHub repository.
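Independently of that code, a quick way to peek at the label files is sketched below. It parses one `object_labels` JSON file and reports only its top-level structure, since the JSON schema is not described here. The function names (`read_split`, `load_object_labels`, `summarize`) are hypothetical, and treating the `ImageSets` files as plain text with one entry per line is an assumption.

```python
import json
from pathlib import Path


def read_split(split_file):
    """Return the non-empty lines of a split file such as ImageSets/train.txt.

    Assumption: the split files are plain text with one entry per line.
    """
    text = Path(split_file).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


def load_object_labels(scene_dir, frame_id, sensor="camera"):
    """Parse object_labels/<sensor>/<frame_id>.json and return it unmodified."""
    if sensor not in ("camera", "lidar"):
        raise ValueError(f"unknown sensor: {sensor!r}")
    path = Path(scene_dir) / "object_labels" / sensor / f"{frame_id}.json"
    with path.open(encoding="utf-8") as f:
        return json.load(f)


def summarize(parsed):
    """Describe the top-level structure of parsed JSON without assuming a schema."""
    if isinstance(parsed, dict):
        return {"type": "dict", "keys": sorted(parsed)}
    if isinstance(parsed, list):
        return {"type": "list", "length": len(parsed)}
    return {"type": type(parsed).__name__}
```

Calling `summarize(load_object_labels(scene_dir, "000000", sensor="lidar"))` on a downloaded scene shows which keys or how many entries a label file contains before any parsing logic is written against it.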

@article{10143263,
  author = {Piroli, Aldi and Dallabetta, Vinzenz and Kopp, Johannes and Walessa, Marc and Meissner, Daniel and Dietmayer, Klaus},
  journal = {IEEE Robotics and Automation Letters},
  title = {Energy-Based Detection of Adverse Weather Effects in LiDAR Data},
  year = {2023},
  volume = {8},
  number = {7},
  pages = {4322-4329},
  doi = {10.1109/LRA.2023.3282382}
}

@misc{https://tudatalib.ulb.tu-darmstadt.de/handle/tudatalib/3537,
  url = {https://tudatalib.ulb.tu-darmstadt.de/handle/tudatalib/3537},
  author = {Linnhoff, Clemens and Elster, Lukas and Rosenberger, Philipp and Winner, Hermann},
  doi = {10.48328/tudatalib-930},
  keywords = {Automated Driving, Lidar, Radar, Spray, Weather, Perception, Simulation, 407-04 Verkehrs- und Transportsysteme, Intelligenter und automatisierter Verkehr, 380},
  publisher = {Technical University of Darmstadt},
  year = {2022-04},
  copyright = {Creative Commons Attribution 4.0},
  title = {Road Spray in Lidar and Radar Data for Individual Moving Objects}
}